Liran Silbermann, Leo AI Marketing

Feb 12, 2026

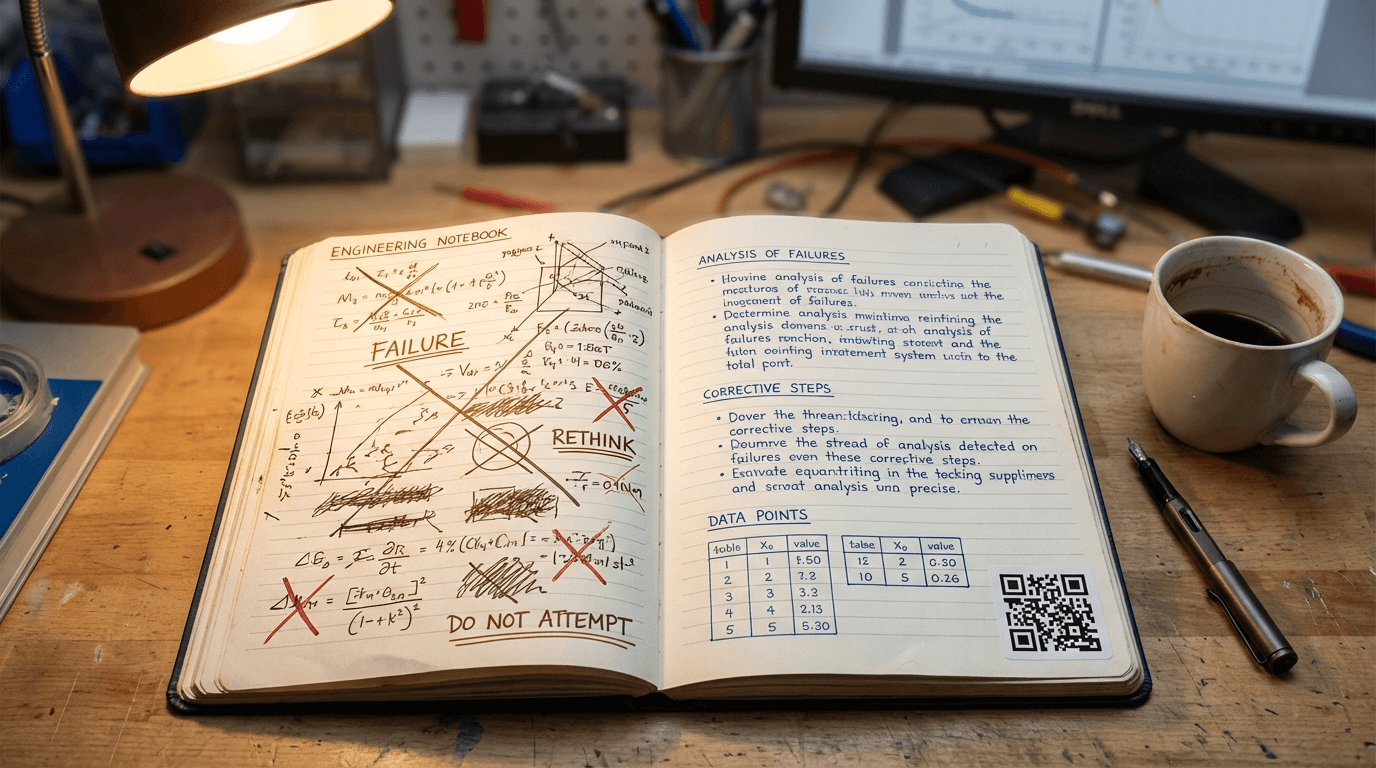

$400K and 6 months wasted, because nobody remembered that approach had already failed in 2019.

A hardware company redesigned a cooling system component using a specific fin geometry. The design looked good. Simulations passed. Prototypes were built. Testing revealed the same heat distribution problem that had killed an identical approach three years earlier. The engineer who knew why it wouldn't work had retired 18 months earlier. The knowledge left with him.

According to research from the American Society of Mechanical Engineers, 30-40% of design errors in hardware engineering are "known problems" that have been solved before. Teams repeat the same mistakes because failure knowledge isn't captured or isn't accessible when needed, or because patterns aren't recognized across projects.

For a 50-person engineering team, repeated design mistakes cost $500K-$1.5M annually in wasted design time, prototyping costs, testing cycles, and market delays.

This article shows you how to build a systematic approach to preventing repeated mistakes using AI-powered pattern recognition and proactive failure knowledge management.

The Cost of Repeated Design Mistakes

Let's break down what "repeated mistakes" actually cost beyond the obvious rework.

Design Time Waste

Typical scenario: Engineer spends 3-6 weeks on a design approach that was already tried and failed.

Cost per incident:

Design time: 120-240 hours × $85/hour = $10,200-$20,400

Senior engineer review time: 20-40 hours × $120/hour = $2,400-$4,800

Total wasted design effort: $12,600-$25,200 per incident

Annual impact: Even a conservative estimate of 10 repeated mistakes per year = $126,000-$252,000

Prototyping and Testing Costs

Typical scenario: Design proceeds to prototype before failure mode is discovered.

Cost per incident:

Prototype fabrication: $5,000-$50,000 (depending on complexity)

Testing setup and execution: $8,000-$25,000

Failure analysis: $3,000-$10,000

Total wasted prototyping: $16,000-$85,000 per incident

Annual impact: 5-8 incidents reaching prototype stage = $80,000-$680,000

Market Delay Costs

Typical scenario: Repeated mistake discovered late in development cycle, forcing redesign and delaying product launch.

Cost per incident:

3-6 month market delay

Lost first-mover advantage

Competitor gains market share

Revenue delay on a product earning $10M per year: $2.5M per quarter, or $2.5M-$5M for a 3-6 month delay

Annual impact: Even one major product delay = $2.5M-$5M opportunity cost

Customer Impact and Field Failures

Worst case scenario: Repeated mistake makes it to production and causes field failures.

Cost per incident:

Warranty claims and repairs: $50,000-$500,000

Recall costs (if severe): $1M-$10M+

Reputation damage: Incalculable

Liability exposure: Potentially catastrophic

Annual impact: Even avoiding one field failure = $50,000-$500,000+ saved

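Total annual cost of repeated design mistakes for a 50-person team: $2.75M-$6.43M across all categories. Most of that is the opportunity cost of market delays; design time, prototyping, and field failures alone come to roughly $256K-$1.43M. The ROI of prevention is massive.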
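The category totals above can be re-derived with a few lines of arithmetic. This is a minimal sketch using only the article's per-incident figures and incident counts; the function names are illustrative, and it assumes the $10M product figure means $10M of annual revenue, so $2.5M per quarter.

```python
# Per-incident cost ranges (low, high) in USD, using the article's figures.
DESIGN_PER_INCIDENT = (120 * 85 + 20 * 120, 240 * 85 + 40 * 120)  # design + senior review hours
PROTOTYPE_PER_INCIDENT = (5_000 + 8_000 + 3_000, 50_000 + 25_000 + 10_000)  # fab + test + failure analysis
QUARTERLY_REVENUE = 10_000_000 // 4  # assumes the $10M product figure is annual revenue


def scale(cost_range, incidents_low, incidents_high):
    """Multiply a (low, high) cost range by a (low, high) incident count."""
    return (cost_range[0] * incidents_low, cost_range[1] * incidents_high)


def annual_cost_ranges():
    """Annual (low, high) cost for each category described above."""
    return {
        "design_time": scale(DESIGN_PER_INCIDENT, 10, 10),
        "prototyping": scale(PROTOTYPE_PER_INCIDENT, 5, 8),
        # 3-6 month delay = 1-2 quarters of revenue
        "market_delay": scale((QUARTERLY_REVENUE, QUARTERLY_REVENUE), 1, 2),
        "field_failure": (50_000, 500_000),
    }


def total(ranges):
    """Sum the low ends and the high ends separately."""
    return (sum(r[0] for r in ranges.values()), sum(r[1] for r in ranges.values()))


if __name__ == "__main__":
    ranges = annual_cost_ranges()
    for name, (low, high) in ranges.items():
        print(f"{name:>14}: ${low:,} - ${high:,}")
    low, high = total(ranges)
    print(f"{'total':>14}: ${low:,} - ${high:,}")
```

Dropping the market-delay entry from the dictionary gives the direct-cost range on its own.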

Why Companies Keep Making the Same Mistakes

Understanding root causes is essential to building effective solutions.

Reason 1: Failure Knowledge Isn't Captured

The problem: Engineering culture has success bias. Teams document what works, not what fails.

When a design approach fails, the typical response is:

Try something different immediately

Move on quickly to avoid dwelling on failure

Maybe mention it in a design review

Hope someone remembers next time

What doesn't happen:

Systematic documentation of what was tried

Analysis of why it failed

Clear guidance on what to avoid

Searchable record for future reference

Result: Failure knowledge lives only in the heads of the engineers who experienced it. When they leave or forget, the knowledge is gone.

Reason 2: Knowledge Isn't Accessible When Needed

The problem: Even when failures are documented, finding that information when you need it is nearly impossible.

Where failure knowledge might exist:

Buried in email threads from 2-3 years ago

In someone's personal notes or OneNote

Mentioned briefly in a design review presentation

In PDM vault comments that nobody reads

In the head of an engineer who's now at a different company

Result: An engineer starting a new design has no practical way to discover that a similar approach failed before. The knowledge exists but is effectively lost.

Reason 3: Patterns Aren't Recognized

The problem: Repeated mistakes often aren't identical. They're similar patterns applied in slightly different contexts.

Example:

2019: Fin geometry A with material X failed heat distribution testing

2023: Fin geometry B (90% similar) with material Y encounters same failure mode

Human engineer doesn't recognize the pattern because details are different

Same fundamental problem, different surface appearance

Result: Pattern-based failures repeat because humans can't effectively search their memory across thousands of past designs to find similar approaches.
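The fin example is why keyword search falls short and similarity matching helps. A minimal sketch, assuming each past design is summarized by a few numeric geometry features: the feature names, values, and the 0.85 threshold are hypothetical, and the relative-difference score is a simple stand-in chosen for illustration, not how any particular product measures similarity. Material is deliberately left out of the features, so the match survives the switch from material X to material Y.

```python
from dataclasses import dataclass


@dataclass
class PastDesign:
    name: str
    year: int
    outcome: str    # "failed" or "passed"
    features: dict  # numeric geometry features, e.g. {"fin_height_mm": 40.0}


def similarity(a: dict, b: dict) -> float:
    """Score in [0, 1]; 1.0 means identical values on every shared feature.

    Each shared feature contributes |x - y| / max(|x|, |y|), capped at 1.0;
    the score is 1 minus the average of those relative differences.
    """
    shared = a.keys() & b.keys()
    if not shared:
        return 0.0
    diff = 0.0
    for key in shared:
        x, y = a[key], b[key]
        scale = max(abs(x), abs(y))
        diff += 0.0 if scale == 0 else min(1.0, abs(x - y) / scale)
    return 1.0 - diff / len(shared)


def find_similar_failures(candidate: dict, history: list, threshold: float = 0.85):
    """Return (score, design) pairs for past *failed* designs at or above the threshold."""
    hits = [(similarity(candidate, d.features), d) for d in history if d.outcome == "failed"]
    return sorted((h for h in hits if h[0] >= threshold), key=lambda h: h[0], reverse=True)


history = [
    PastDesign("Fin geometry A", 2019, "failed",
               {"fin_height_mm": 40.0, "fin_thickness_mm": 1.2, "fin_pitch_mm": 4.0, "fin_count": 24}),
    PastDesign("Fin geometry C", 2020, "failed",
               {"fin_height_mm": 15.0, "fin_thickness_mm": 3.0, "fin_pitch_mm": 10.0, "fin_count": 8}),
]

# New design: different part number and material, nearly the same geometry as A.
candidate = {"fin_height_mm": 38.0, "fin_thickness_mm": 1.1, "fin_pitch_mm": 4.2, "fin_count": 24}
for score, design in find_similar_failures(candidate, history):
    print(f"{design.name} ({design.year}) failed before; similarity {score:.2f}")
```

Only Fin geometry A is flagged (score about 0.95); the dissimilar failed design scores far below the threshold. In practice the features would need consistent units and some weighting, since not every dimension matters equally to heat distribution.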

Reason 4: No Systematic Review of Past Failures

The problem: Most companies don't have a "lessons learned" process that actually works.

What typically happens:

Post-mortem meetings after major failures

Action items assigned and forgotten

Knowledge stays in meeting notes nobody reads

No mechanism to surface relevant lessons during future design work

Result: Learning from failures requires proactive effort that never happens in the rush of daily work.

Reason 5: Organizational Amnesia

The problem: According to Pew Research, roughly 10,000 baby boomers reach age 65 every day in the US. The average engineer stays at a company 4.2 years.

Turnover impact:

Senior engineer with 15 years of failure knowledge retires

Knowledge of what doesn't work leaves with him

New engineers have no access to that accumulated wisdom

Same mistakes happen again within 2-3 years

Result: Companies lose 15-20% of their engineering knowledge annually. Failure knowledge is the first to go because it's the least formally documented.

Which Types of Design Mistakes Are Worth Preventing?

Not all mistakes are equal. Focus prevention efforts on high-impact categories.

Category 1: Material and Geometry Combinations

What this includes:

Material pairings that fail compatibility testing

Geometry configurations that cause stress concentration

Coating and substrate combinations that delaminate

Material treatments that affect tolerances

Example: A medical device company discovered that a specific sterilization method caused a certain plastic to degrade. Cost: $1.2M and 8 months. Leo AI now flags similar material-sterilization combinations instantly.

Prevention value: High. These failures are predictable and pattern-based.
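Material and process pairings are a good fit for an explicit lookup table. A minimal sketch under stated assumptions: every name below is a placeholder rather than a real resin or sterilization method, and mapping variant grades to a base material family is one simple way to catch "similar" combinations, not a description of how Leo AI does it.

```python
from typing import Optional

# Hypothetical placeholder names; a real table would be built from test reports.
MATERIAL_FAMILY = {
    "polymer-x": "polymer-x",
    "polymer-x grade 2": "polymer-x",  # variant grade of the same base resin
    "polymer-z": "polymer-z",
}

KNOWN_INCOMPATIBLE = {
    ("polymer-x", "sterilization-y"): "Material degradation found in validation testing",
}


def _norm(name: str) -> str:
    """Normalize names so lookups ignore case and stray whitespace."""
    return name.strip().lower()


def check_combination(material: str, process: str) -> Optional[str]:
    """Return the recorded failure note if this material family + process failed before."""
    family = MATERIAL_FAMILY.get(_norm(material), _norm(material))
    return KNOWN_INCOMPATIBLE.get((family, _norm(process)))


print(check_combination("Polymer-X Grade 2", "Sterilization-Y"))  # flagged via its family
print(check_combination("Polymer-Z", "Sterilization-Y"))          # None: no recorded failure
```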

Category 2: Assembly Sequences and Manufacturing Constraints

What this includes:

Assembly sequences that work in CAD but fail on production line

Part orientations that prevent access for fasteners or welds

Tolerance stackups that cause assembly issues

Design for manufacturing violations

Example: Aerospace manufacturer designed complex sheet metal assembly that couldn't be welded in the designed sequence. Discovered during first production run. Redesign cost: $180K and 6 weeks delay.

Prevention value: Very high. Manufacturing constraints are known and repeatable.
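Tolerance stackups are one of the few items in this category that reduce to arithmetic, which makes them easy to check before a part reaches the line. A minimal one-dimensional sketch, assuming symmetric ± tolerances and made-up dimensions: worst-case stacking adds tolerances linearly, while root-sum-square (RSS) assumes independent, roughly normal variation and so predicts a tighter spread.

```python
import math
from dataclasses import dataclass


@dataclass
class Dim:
    name: str
    nominal: float  # mm; positive terms open the gap, negative terms close it
    tol: float      # symmetric +/- tolerance in mm


def stackup(dims):
    """Return (nominal gap, worst-case variation, RSS variation) for a 1-D chain."""
    nominal = sum(d.nominal for d in dims)
    worst_case = sum(d.tol for d in dims)
    rss = math.sqrt(sum(d.tol ** 2 for d in dims))
    return nominal, worst_case, rss


# Hypothetical chain: three stacked parts inside a housing opening.
chain = [
    Dim("housing opening", 50.0, 0.10),
    Dim("part 1", -16.0, 0.05),
    Dim("part 2", -16.0, 0.05),
    Dim("part 3", -17.5, 0.08),
]
MIN_GAP = 0.30  # hypothetical assembly clearance requirement, mm

nominal, worst, rss = stackup(chain)
print(f"nominal gap {nominal:.2f} mm")
print(f"worst-case min {nominal - worst:.3f} mm -> {'OK' if nominal - worst >= MIN_GAP else 'FAIL'}")
print(f"RSS min        {nominal - rss:.3f} mm -> {'OK' if nominal - rss >= MIN_GAP else 'FAIL'}")
```

The example gap passes an RSS check but fails worst case, which is exactly the kind of result worth recording alongside the assembly that exposed it.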

Category 3: Vendor and Supplier Issues

What this includes:

Vendors with quality problems on specific components

Lead times that always exceed quotes

Material sourcing issues for particular grades

Supplier capability limitations

Example: Hardware team specified vendor A for custom extrusion. Vendor had failed to meet tolerances on three previous projects. Knowledge wasn't accessible. Same quality issues repeated. Cost: $75K in rejected parts and 4-week delay.

Prevention value: High. Vendor performance history is objective and searchable.
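Vendor history is structured data, so checking it can be as simple as aggregating past inspection results before a vendor is specified. A minimal sketch, assuming a flat list of per-project records; the field names, the 25% failure-rate threshold, and the lateness figures are hypothetical, while the three missed-tolerance projects for Vendor A mirror the example above.

```python
from dataclasses import dataclass


@dataclass
class VendorRecord:
    vendor: str
    component_type: str
    project: str
    met_tolerance: bool
    days_late: int  # actual delivery minus quoted lead time, in days


def vendor_summary(records, vendor, component_type):
    """Aggregate past performance for one vendor on one component type, or None if no history."""
    rows = [r for r in records if r.vendor == vendor and r.component_type == component_type]
    if not rows:
        return None
    failures = sum(1 for r in rows if not r.met_tolerance)
    return {
        "projects": len(rows),
        "tolerance_failures": failures,
        "failure_rate": failures / len(rows),
        "avg_days_late": sum(r.days_late for r in rows) / len(rows),
    }


def should_flag(summary, max_failure_rate=0.25, min_projects=2):
    """Flag a vendor with enough history and a failure rate above the threshold."""
    return (summary is not None
            and summary["projects"] >= min_projects
            and summary["failure_rate"] > max_failure_rate)


history = [
    VendorRecord("Vendor A", "custom extrusion", "Project 1", False, 10),
    VendorRecord("Vendor A", "custom extrusion", "Project 2", False, 14),
    VendorRecord("Vendor A", "custom extrusion", "Project 3", False, 7),
    VendorRecord("Vendor B", "custom extrusion", "Project 2", True, 0),
    VendorRecord("Vendor B", "custom extrusion", "Project 4", True, 2),
]

summary = vendor_summary(history, "Vendor A", "custom extrusion")
print(summary, should_flag(summary))
```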

Category 4: Requirements and Constraint Violations

What this includes:

Designs that violate industry standards or certifications

Approaches that fail regulatory requirements

Constraint combinations that cause downstream problems

Design patterns that violate company standards

Example: Engineer designed mechanism that worked perfectly but violated UL certification requirements. Discovered during certification review. Redesign cost: $95K and 8-week delay.

Prevention value: Very high. Requirements are known upfront.

Category 5: Proven-to-Fail Design Approaches

What this includes:

Design concepts that were prototyped and failed testing

Analytical approaches that produced incorrect results

Optimization strategies that led to dead ends

Innovative ideas that didn't work in practice

Example: Team explored novel bearing design for 4 months. Prototype testing showed fundamental flaw. Same concept was explored and rejected 5 years earlier. Cost: $120K in wasted engineering time.

Prevention value: Extremely high. These are the most frustrating repeats.

Building a Design Mistake Prevention System

A systematic four-step framework for capturing, accessing, and applying failure knowledge.

Step 1: Capture Failure Knowledge Systematically

Move from ad-hoc to systematic failure documentation.

What to capture:

Design approach that was tried

Why it was attempted (what problem it aimed to solve)

How it failed (what went wrong)

Root cause analysis (why it failed fundamentally)

What was done instead (successful alternative)

Decision rationale (why alternative works)
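The six items above map directly onto a structured record. A minimal sketch, assuming a Python dataclass serialized to JSON as the storage format (the class and field names are illustrative, not a PDM or PLM schema). The completeness check is one way to keep half-filled entries from being filed; here it reports what is still missing from the 2019 fin geometry failure, whose root cause the opening story never states.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class FailureRecord:
    approach: str      # design approach that was tried
    motivation: str    # what problem it aimed to solve
    failure_mode: str  # how it failed
    root_cause: str    # why it failed fundamentally
    alternative: str   # what was done instead
    rationale: str     # why the alternative works
    tags: list = field(default_factory=list)

    def missing_fields(self):
        """Names of required text fields that are still blank."""
        return [name for name, value in asdict(self).items()
                if name != "tags" and not value.strip()]


record = FailureRecord(
    approach="Fin geometry A with material X",
    motivation="Improve heat dissipation in cooling component",  # hypothetical
    failure_mode="Failed heat distribution testing",
    root_cause="",
    alternative="",
    rationale="",
    tags=["thermal", "fins"],
)
print(record.missing_fields())  # ['root_cause', 'alternative', 'rationale']
print(json.dumps(asdict(record), indent=2))
```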

How to capture it:

Manual approach:

Lessons-learned template in PDM system

Required field in design review documentation

Dedicated "design failures" database

Regular capture sessions with senior engineers

Automated approach:

AI system learns from design changes and revisions

PDM vault data automatically indexed

Email discussions about failed approaches captured

Design review recordings transcribed and indexed

Pattern recognition identifies similar failures across projects

Key principle: Capture during work, not after. When a failure happens, document it immediately, before moving on to the solution. The 10 minutes invested saves months later.

Step 2: Make Failure Knowledge Accessible

Transform captured knowledge into actionable intelligence.

Requirements for accessibility:

Searchable by design context, not just keywords

Embedded in workflow (alerts during design, not separate system)

Visual pattern matching (similar geometries flagged automatically)

Natural language queries ("has anyone tried this approach before?")

Technology solutions:

Traditional approach:

SharePoint or Confluence database of lessons learned

PDM vault search of past projects

Email archive search

Ask senior engineers directly

AI-powered approach:

Semantic search understands engineering intent, not just words

Pattern recognition matches similar designs even with different details

Proactive alerts during design work ("similar approach failed in 2019")

Instant answers to "what happens if I..." questions

Integration directly in SOLIDWORKS workflow

Comparison:

| Capability | Traditional | AI-Powered |
|---|---|---|
| Search time | 15-45 minutes | <30 seconds |
| Pattern recognition | Manual (if it happens at all) | Automatic |
| Proactive alerts | None | Real-time |
| Context understanding | Keyword-based | Semantic |
| Availability | Business hours only | 24/7 |
| Scaling | Requires human experts | Unlimited |

Step 3: Implement Proactive Alerting

Don't wait for engineers to search. Bring knowledge to them.

Real-time checks during design work:

Geometry-based alerts:

"This fin geometry is similar to Design ABC123 which failed heat distribution testing"

"This tolerance stackup caused assembly issues on Project XYZ"

"This sheet metal bend radius failed formability testing previously"

Material-based alerts:

"Material combination X+Y failed compatibility testing in 2020"

"This coating process caused delamination on similar substrate"

"Vendor A has a 40% defect rate on this component type"

Constraint-based alerts:

"This mate combination typically causes circular reference errors"

"Similar assembly sequence could not be fabricated in Production Project DEF"

"This approach violates UL Standard 60950 based on past certification"

Implementation:

Rule-based system:

Define specific rules based on known failures

Check new designs against rule database

Alert when violations detected

Requires manual rule creation and maintenance

AI-based system:

Learns patterns from historical failures automatically

Recognizes similar approaches even when details differ

Improves accuracy over time as more data added

No manual rule creation required

Example: Leo AI monitors SOLIDWORKS work in real time. When an engineer creates geometry similar to a past failure, an alert appears: "Similar cooling channel design failed pressure testing on Project Titan (2021). Issue: insufficient wall thickness at junction points. Recommended minimum: 3.2mm. Current design: 2.8mm. See full analysis: [link]"
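For contrast with the AI-based approach, here is the rule-based option from the Implementation list, reduced to a minimal sketch. It encodes the threshold from the example (3.2 mm minimum at junctions, 2.8 mm current design) as an explicit rule; the rule IDs, feature names, and the second bend-radius rule are hypothetical, and this is not how Leo AI works. It also shows the limitation noted above: every rule has to be written and maintained by hand.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    rule_id: str
    message: str
    source: str                       # past failure that motivated the rule
    violated: Callable[[dict], bool]  # True when the design breaks the rule


RULES = [
    Rule("WALL-MIN-3.2",
         "Wall thickness at junction points below 3.2 mm minimum",
         "Project Titan (2021) pressure test failure",
         lambda d: "junction_wall_mm" in d and d["junction_wall_mm"] < 3.2),
    # Hypothetical second rule: inside bend radius smaller than sheet thickness.
    Rule("BEND-MIN-1T",
         "Sheet metal bend radius below 1x material thickness",
         "hypothetical formability test record",
         lambda d: "bend_radius_mm" in d and "sheet_thickness_mm" in d
         and d["bend_radius_mm"] < d["sheet_thickness_mm"]),
]


def run_checks(design: dict, rules=RULES):
    """Return one alert per violated rule; rules whose inputs are absent are skipped."""
    return [f"[{r.rule_id}] {r.message} (see {r.source})" for r in rules if r.violated(design)]


design = {"junction_wall_mm": 2.8, "bend_radius_mm": 2.0, "sheet_thickness_mm": 1.5}
for alert in run_checks(design):
    print(alert)
```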

Step 4: Continuous Learning and Improvement

Build organizational memory that gets smarter over time.

How learning compounds:

Month 1-3: System captures current project failures and indexes existing design history

Month 4-6: Pattern recognition begins identifying similar failures across different projects

Month 7-12: Proactive alerts prevent first repeated mistakes; team sees measurable value

Year 2: Knowledge base comprehensive enough to catch 60-70% of potential repeated mistakes

Year 3: System becomes primary design validation tool; prevents mistakes before they happen

Year 5: Organizational failure knowledge is competitive advantage; new engineers access 20+ years of lessons learned instantly

Feedback loops that improve the system:

Track which alerts were helpful vs. false positives

Capture new failures that weren't previously in system

Update patterns as design approaches evolve

Cross-pollinate knowledge between different product lines

Learn from near-misses, not just actual failures
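The first feedback loop above, tracking helpful alerts versus false positives, comes down to a per-pattern precision count. A minimal sketch, assuming engineers rate each alert as helpful or not; the pattern IDs, the 50% precision floor, and the five-rating minimum are hypothetical. Patterns with too few ratings are left alone rather than judged on noise.

```python
from collections import defaultdict


def alert_stats(feedback):
    """feedback: iterable of (pattern_id, was_helpful). Returns {pattern_id: (helpful, total)}."""
    counts = defaultdict(lambda: [0, 0])
    for pattern_id, helpful in feedback:
        counts[pattern_id][1] += 1
        if helpful:
            counts[pattern_id][0] += 1
    return {pid: (h, t) for pid, (h, t) in counts.items()}


def patterns_to_review(feedback, min_precision=0.5, min_alerts=5):
    """Patterns with enough ratings whose share of helpful alerts is below min_precision."""
    return sorted(pid for pid, (h, t) in alert_stats(feedback).items()
                  if t >= min_alerts and h / t < min_precision)


feedback = ([("fin-geometry", True)] * 5 + [("fin-geometry", False)]
            + [("mate-pattern", True)] * 2 + [("mate-pattern", False)] * 6
            + [("vendor-a", False)] * 3)

print(alert_stats(feedback))
print(patterns_to_review(feedback))  # ['mate-pattern']
```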

Technology Solutions for Mistake Prevention

Option 1: Design Rule Checking (DRC) Systems

What they do: Automated checking of designs against defined rules and standards.

Strengths:

Good for known, well-defined rules

Fast execution during design work

Clear pass/fail criteria

Industry-standard for certain domains

Limitations:

Only catches violations of explicitly programmed rules

Doesn't learn from failures automatically

Can't recognize pattern-based issues

High maintenance overhead as rules multiply

Best for: Compliance checking, standard violations, quantitative constraints

Cost: $10K-$50K per seat for specialized DRC tools

Option 2: PLM Systems with Lessons-Learned Modules

What they do: Structured capture and retrieval of project lessons and design decisions.

Strengths:

Integrated with existing design data

Formal process for capturing lessons

Searchable database of past issues

Audit trail and traceability

Limitations:

Requires manual documentation effort

Search is keyword-based, not semantic

Knowledge not surfaced proactively during design

Low adoption due to extra work required

Best for: Formal lessons-learned process, compliance documentation, post-mortem analysis

Cost: Included in most PLM systems; value depends on adoption

Option 3: AI-Powered Design Assistants

What they do: Learn from design history automatically and provide proactive guidance during design work.

Strengths:

Automatic knowledge capture (no manual documentation)

Semantic understanding of design intent

Proactive alerts during design, not just search

Pattern recognition across thousands of designs

Continuous learning and improvement

Integrates directly into SOLIDWORKS workflow

Limitations:

Requires 2-4 weeks initial indexing

Accuracy improves over time (not perfect immediately)

Needs connection to PDM, email, and design data

Best for: Preventing repeated mistakes, accelerating learning, preserving tribal knowledge

Cost: $200-$400 per seat per month; ROI typically 10-20x

Example: Leo AI analyzes your complete design history, learns failure patterns, and alerts you instantly when current work resembles past failures. One medical device company prevented a $1.2M failure when the AI flagged a material compatibility issue on day 3 of the design phase.

Real-World Prevention Examples

Medical Device Case: Material Compatibility

Company: Surgical instrument manufacturer, 85 engineers

Problem: A specific biocompatible plastic degraded when processed with a specific sterilization method. The issue was discovered during validation testing after 8 months of development. Cost: $1.2M and a market launch delay.

Previous occurrence: The same material-sterilization combination had failed 4 years earlier on a different product line. The knowledge existed only in a retired engineer's head and a buried email thread.

Solution implemented: Leo AI indexed all past testing data and material specifications. System trained to flag biocompatibility concerns.

Result: New product design using similar material flagged on day 3. Engineer reviewed past failure, selected proven alternative material immediately. Prevented repeat of $1.2M mistake.

ROI: System paid for itself with first prevented failure.

Aerospace Case: Assembly Sequence Validation

Company: Aircraft component manufacturer, 140 engineers

Problem: Complex sheet metal assembly designed in SOLIDWORKS assembled perfectly in CAD. Production discovered welding sequence impossible due to access constraints. Required complete redesign. Cost: $180K and 6-week delay.

Previous occurrence: Similar assembly approach had failed on two previous programs for identical reason.

Solution implemented: AI system analyzed assembly sequences from all past projects. Pattern recognition identified access-constrained designs.

Result: New design flagged during design phase: "Assembly sequence similar to Project Falcon (2019) and Project Eagle (2021). Both required redesign due to weld access constraints. Recommend DFM review before proceeding."

Impact: DFM review in design phase identified issue. Corrected before any fabrication. Saved $180K and prevented 6-week delay.

Automotive Case: Constraint Pattern Recognition

Company: Electric vehicle components, 95 engineers

Problem: Specific combination of SOLIDWORKS mates in drive train assembly caused circular reference errors that took senior engineers days to debug. Happened repeatedly across different projects because pattern wasn't obvious.

Solution implemented: AI learned the problematic constraint pattern from historical projects where issue occurred.

Result: New assembly using similar mate logic flagged immediately: "This constraint pattern caused circular reference errors in 7 previous assemblies. Recommended approach: [alternative mate strategy]. See examples: Project A, Project D, Project J."

Impact: Junior engineer avoided 3-day debugging nightmare. Applied proven alternative approach immediately. Senior engineer time saved: 12 hours.

Scaling impact: Pattern recognition prevented same issue 23 times in first year across different engineers and projects.

ROI of Mistake Prevention

Reduced Design Iterations

Baseline: 35% of design revisions due to repeated mistakes

With prevention: 60% reduction in mistake-driven revisions

Impact for 50-person team:

500 design revisions per year × 35% mistake-driven = 175 revisions

175 × 60% prevented = 105 revisions avoided

105 revisions × 40 hours average = 4,200 hours saved

4,200 hours × $85/hour = $357,000 annual value

Faster Time to Market

Baseline: 15% of product delays due to late-discovered design issues

With prevention: 70% of preventable delays caught in design phase

Impact:

10 products per year × 15% delayed = 1.5 product delays

Average delay: 8 weeks

Average revenue impact: $2M per delayed product

1.5 delays × $2M × 70% prevented = $2.1M opportunity value protected

Lower Prototyping Costs

Baseline: 5-8 prototypes built annually that fail due to repeated design mistakes

With prevention: 75% caught before prototyping

Impact:

6.5 average prototypes × $35K average cost = $227.5K

× 75% prevented = $170,625 direct cost savings

Improved Quality and Reduced Field Failures

Baseline: 2-3 field issues per year traceable to repeated design mistakes

With prevention: 80% prevented through early detection

Impact:

2.5 average field issues × $150K average cost = $375K

× 80% prevented = $300,000 quality cost savings

Competitive Advantage

Unmeasurable but valuable:

Faster design cycles create first-mover advantage

Better quality builds reputation

Knowledge accumulation creates moat

New engineers productive faster with access to failure knowledge

Total Annual Value

Conservative estimate for a 50-person team:

Design iteration savings: $357,000

Time-to-market value: $2,100,000

Prototyping savings: $170,625

Quality savings: $300,000

Total: $2,927,625 annual value

Investment required: $150,000-$250,000 (AI system, process changes, training)

ROI: 12-20x in first year
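The four value lines and the ROI range can be checked in a few lines. This sketch uses exact fractions so the percentages do not pick up floating-point rounding, and it follows the calculation above in treating $2M as the revenue impact of each delayed product. All inputs are the article's own figures; only the function names are illustrative.

```python
from fractions import Fraction


def pct(p):
    """Exact percentage as a fraction, avoiding float rounding."""
    return Fraction(p, 100)


def annual_value():
    return {
        # revisions x mistake-driven share x prevented share x hours x rate
        "design_iterations": 500 * pct(35) * pct(60) * 40 * 85,
        # products x delayed share x $2M impact per delay x prevented share
        "time_to_market": 10 * pct(15) * 2_000_000 * pct(70),
        # midpoint of 5-8 failed prototypes x $35K x prevented share
        "prototyping": Fraction(5 + 8, 2) * 35_000 * pct(75),
        # midpoint of 2-3 field issues x $150K x prevented share
        "quality": Fraction(2 + 3, 2) * 150_000 * pct(80),
    }


values = annual_value()
total = sum(values.values())
for name, value in values.items():
    print(f"{name:>17}: ${float(value):,.0f}")
print(f"{'total':>17}: ${float(total):,.0f}")
for investment in (150_000, 250_000):
    print(f"ROI at ${investment:,}: {float(total / investment):.1f}x")
```

The low end comes out at about 11.7x, which rounds to the 12x quoted. The time-to-market line is roughly 72% of the total, so the ROI is most sensitive to the delay assumptions.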

Implementation Roadmap

Phase 1: Assessment and Cataloging (Month 1)

Week 1-2: Identify known failures

Interview senior engineers about repeated mistakes they've seen

Review past project post-mortems and lessons learned

Search email and PDM for mentions of failed approaches

Categorize by type (material, geometry, assembly, vendor, etc.)

Week 3-4: Create initial knowledge base

Document top 20-30 known failure patterns

Capture what failed, why, and what works instead

Organize by searchability (tags, categories, metadata)

Validate with engineering leadership

Deliverable: Initial failure knowledge catalog with 20-30 entries
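For a first catalog of 20-30 entries, a plain inverted index from tags to entry IDs is enough to make the Week 3-4 deliverable searchable before any AI system is connected. A minimal sketch; the entry IDs, titles, and tags are hypothetical examples loosely modeled on failures described earlier in this article.

```python
from collections import defaultdict

# Hypothetical catalog entries from the Week 1-4 interviews and reviews.
catalog = {
    "F-001": {"category": "geometry", "title": "Fin geometry failed heat distribution testing",
              "tags": ["thermal", "fins", "heat-sink"]},
    "F-002": {"category": "material", "title": "Sterilization method degraded plastic housing",
              "tags": ["sterilization", "plastic", "medical"]},
    "F-003": {"category": "assembly", "title": "Weld sequence blocked by access constraints",
              "tags": ["welding", "sheet-metal", "dfm"]},
    "F-004": {"category": "material", "title": "Thermal pad coating delaminated from fin base",
              "tags": ["thermal", "fins", "coating"]},
}


def build_tag_index(entries):
    """Inverted index: lowercase tag -> set of entry IDs carrying that tag."""
    index = defaultdict(set)
    for entry_id, entry in entries.items():
        for tag in entry["tags"]:
            index[tag.lower()].add(entry_id)
    return index


def lookup(index, *tags):
    """IDs of entries that carry every requested tag (AND query)."""
    matches = [index.get(tag.lower(), set()) for tag in tags]
    return set.intersection(*matches) if matches else set()


index = build_tag_index(catalog)
for entry_id in sorted(lookup(index, "thermal", "fins")):
    print(entry_id, catalog[entry_id]["title"])
```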

Phase 2: System Implementation (Months 2-3)

Week 5-6: Technology deployment

Select and deploy AI-powered mistake prevention system

Connect to PDM, PLM, email, and collaboration tools

Begin automatic indexing of design history

Configure proactive alerting parameters

Week 7-8: Pilot with core team

Select 10-15 engineers for pilot

Train on system usage and failure capture process

Monitor alerts and refine pattern recognition

Gather feedback and adjust

Week 9-12: Knowledge base enhancement

Review pilot results and identify gaps

Add failure patterns discovered during pilot

Improve alert accuracy based on feedback

Expand pilot to 30-40 engineers

Deliverable: Functioning system with 50+ failure patterns indexed

Phase 3: Full Rollout (Months 4-6)

Week 13-16: Enterprise deployment

Roll out to all engineers

Provide training and documentation

Establish ongoing failure capture process

Set up measurement and reporting

Week 17-20: Process integration

Integrate failure capture into design review process

Make "lessons learned" part of project closeout

Establish KPIs and tracking dashboard

Recognize early adopters and success stories

Week 21-24: Optimization

Analyze prevention effectiveness

Calculate ROI and communicate results

Identify additional failure categories to capture

Plan continuous improvement initiatives

Deliverable: Full deployment with measurable prevention results

Phase 4: Continuous Improvement (Ongoing)

Monthly:

Review new failures and add to knowledge base

Analyze alert effectiveness (helpful vs. false positives)

Track prevention metrics

Share success stories with team

Quarterly:

Calculate ROI and cost savings

Update failure patterns based on new data

Cross-pollinate knowledge between product lines

Refine proactive alerting logic

Annually:

Comprehensive system review

Benchmark against industry standards

Expand to adjacent use cases (supplier quality, manufacturing)

Strategic planning for knowledge expansion

Measuring Prevention Success

Leading Indicators (Track Weekly/Monthly)

System usage:

% of engineers actively using prevention system (target: >80%)

Questions asked about past failures (target: increasing first 6 months)

Alerts reviewed and acted upon (target: >70% actionable)

Knowledge coverage:

Failure patterns captured and indexed (target: 100+ after 6 months)

Design domains covered (target: all major product lines)

Historical depth (target: 3+ years of design history indexed)

Lagging Indicators (Track Quarterly/Annually)

Prevention effectiveness:

Design revisions due to repeated mistakes (target: 60% reduction)

Prototypes failing due to known issues (target: 75% reduction)

Late-stage design changes from preventable causes (target: 70% reduction)

Business impact:

Cost of repeated mistakes (target: <$200K annually vs. $2M+ baseline)

Product delays from design issues (target: <5% vs. 15% baseline)

Field failures from known problems (target: <1 annually vs. 2-3 baseline)

Knowledge accumulation:

Total failure patterns in system (target: growing 20%+ annually)

Cross-project knowledge sharing instances (target: measurable increase)

New engineer time to learn failure knowledge (target: <1 month vs. 12-18 months)

Success benchmark: 60%+ reduction in repeated mistakes, $2M+ annual value for 50-person team, 80%+ engineer adoption, 100+ failure patterns captured within 12 months.
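The success benchmark combines several thresholds, which a quarterly review can check mechanically. A minimal sketch, assuming the metrics are collected elsewhere; the metric names and actuals are hypothetical, and the reduction is measured against a baseline of 175 mistake-driven revisions per year, the figure from the ROI section. Metrics that were not measured count as missed.

```python
# Thresholds from the success benchmark above.
TARGETS = {
    "repeat_mistake_reduction": 0.60,  # >= 60% fewer repeated mistakes
    "engineer_adoption": 0.80,         # >= 80% of engineers active
    "failure_patterns": 100,           # >= 100 patterns captured
    "annual_value_usd": 2_000_000,     # >= $2M for a 50-person team
}


def reduction(baseline, current):
    """Fractional reduction relative to a baseline count."""
    return 1 - current / baseline


def benchmark_report(actuals):
    """Map each target to True (met) or False (missed or not measured)."""
    return {name: actuals.get(name, 0) >= target for name, target in TARGETS.items()}


# Hypothetical 12-month actuals.
actuals = {
    "repeat_mistake_reduction": reduction(baseline=175, current=63),  # mistake-driven revisions/year
    "engineer_adoption": 36 / 50,
    "failure_patterns": 118,
    "annual_value_usd": 2_300_000,
}
for name, met in benchmark_report(actuals).items():
    print(f"{name:>25}: {'met' if met else 'MISSED'}")
```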

Common Implementation Challenges and Solutions

Challenge 1: Engineers Resist Documenting Failures

Why it happens: Feels like admitting mistakes publicly; takes extra time; not measured or rewarded

Solution:

Use AI to capture automatically from existing work (PDM, email, reviews)

Frame as "learning organization" culture, not blame

Celebrate failure knowledge sharing in team meetings

Make it a 2-minute process, not a 30-minute documentation effort

Include in performance reviews (positive credit for sharing)

Challenge 2: Too Many False Positive Alerts

Why it happens: AI learning curve; overly broad pattern matching; insufficient context

Solution:

Start with high-confidence patterns only

Refine based on feedback (engineers mark alerts helpful/not helpful)

Improve over 3-6 months as AI learns

Better to have some false positives than miss critical failures

Allow engineers to dismiss with reason (system learns from this)

Challenge 3: Knowledge Base Becomes Overwhelming

Why it happens: Hundreds of failure patterns create noise; hard to find relevant ones

Solution:

AI-powered relevance ranking (show most relevant to current work)

Contextual filtering (only show patterns related to current design domain)

Search and alerting, not browsing (knowledge comes to you)

Regular pruning of outdated or no-longer-relevant patterns

Challenge 4: Slow Adoption by Senior Engineers

Why it happens: "I already know this stuff"; prefer asking colleagues; skeptical of AI

Solution:

Show value for onboarding and mentoring junior engineers

Demonstrate cross-domain knowledge (failures from other product lines)

Highlight time savings from not answering same questions repeatedly

Get early adopter champions among senior engineers

Make it valuable for them, not just junior engineers

Challenge 5: System Doesn't Know About Specific Failure

Why it happens: Not all failures documented; recent issues not yet indexed; edge cases

Solution:

Easy "add failure pattern" interface for engineers

Continuous indexing of new projects and emails

Encourage reporting of near-misses, not just actual failures

Accept that system won't catch everything (80/20 rule applies)

Use the AI system to complement human expertise, not to replace it

Your Next Steps

Preventing repeated design mistakes isn't about perfection. It's about systematically reducing the 30-40% of errors that are predictable and preventable.

The companies that build organizational memory around failure knowledge will design better products faster, spend less on prototyping and testing, and avoid costly field failures.

Start here:

Catalog your known failures - Interview senior engineers about repeated mistakes

Calculate your cost - How much do repeated mistakes cost annually?

Pilot AI prevention - Start with one product line or team

Measure and expand - Track prevention and scale based on results

The technology exists today. The question is whether your competitors will implement it first and gain the knowledge advantage.